Remote production with multiple video sources has a known issue that may affect how viewers perceive the content. Each source (camera or encoder) delivers its stream with its own delay relative to the moment the picture was shot, and that delay typically differs across devices. So if one stream arrives with a half-second delay and another lags a second behind it, while both cameras show the same object from different angles, viewers will notice a significant mismatch. This becomes critical in fast-paced real-time events such as sports matches.

Producers and technicians therefore need a way to synchronize all sources so they can be used on a single timeline. The industry-proven solution is the following setup:

- All sources use the same reference time, e.g. they all get it from the same NTP server.

- Each source inserts SEI (Supplemental Enhancement Information) metadata with timestamps into the stream's video frames while encoding the output.

- The destination media decoder is configured with a fixed time window (a delay) before it passes the content further.

- The decoder reads the SEI timestamps of frames from all sources, holds each frame, and sends frames with matching timestamps out at the same time once the delay is over.
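To illustrate the hold-and-release step described above, here is a minimal Python sketch. It is not Nimble's implementation: it assumes each frame's SEI timestamp has already been parsed into milliseconds of the shared NTP time, and the names `Frame`, `SyncBuffer`, `push` and `pop_due` are hypothetical. Every frame is released at its SEI timestamp plus the delay, so frames shot at the same moment leave together no matter how late each one arrived, as long as it arrived within the delay window.

```python
from __future__ import annotations

import heapq
from dataclasses import dataclass, field


@dataclass(order=True)
class Frame:
    # Capture time from the frame's SEI timestamp, in ms of the shared NTP clock.
    # Only this field takes part in ordering, so the heap sorts by capture time.
    sei_time_ms: int
    source: str = field(compare=False)
    payload: bytes = field(compare=False, default=b"")


class SyncBuffer:
    """Hypothetical hold-and-release buffer: a frame is released at sei_time_ms + delay_ms."""

    def __init__(self, delay_ms: int) -> None:
        self.delay_ms = delay_ms
        self._heap: list[Frame] = []

    def push(self, frame: Frame, now_ms: int) -> bool:
        # A frame that arrives after its release deadline can no longer be aligned.
        if now_ms > frame.sei_time_ms + self.delay_ms:
            return False
        heapq.heappush(self._heap, frame)
        return True

    def pop_due(self, now_ms: int) -> list[Frame]:
        # Release every frame whose deadline has been reached, oldest capture time first.
        due = []
        while self._heap and self._heap[0].sei_time_ms + self.delay_ms <= now_ms:
            due.append(heapq.heappop(self._heap))
        return due


if __name__ == "__main__":
    buf = SyncBuffer(delay_ms=1000)
    # Two cameras shot a frame at t=10000 ms; the frames arrive with 500 ms and 900 ms latency.
    buf.push(Frame(10_000, "camA"), now_ms=10_500)
    buf.push(Frame(10_000, "camB"), now_ms=10_900)
    print([f.source for f in buf.pop_due(now_ms=10_999)])  # []
    print(sorted(f.source for f in buf.pop_due(now_ms=11_000)))  # ['camA', 'camB']
```

Rejecting frames that miss their deadline is a simplification chosen for this sketch; it shows why the delay has to cover the worst-case latency of every source.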

Nimble Streamer supports this approach and handles the following two use cases related to SEI metadata.

- Synchronize multiple NDI output streams. If a Nimble Transcoder scenario has certain settings (described below), Nimble will process SEI metadata and delay the output so that all NDI outputs are sent simultaneously.

- Forward SEI metadata. Nimble Live Transcoder can take SEI metadata from the source frames and add it to the output content, i.e. simply pass it through regardless of the output protocol. This is useful if you need to process the content with Nimble Transcoder and then send it out for further processing elsewhere.

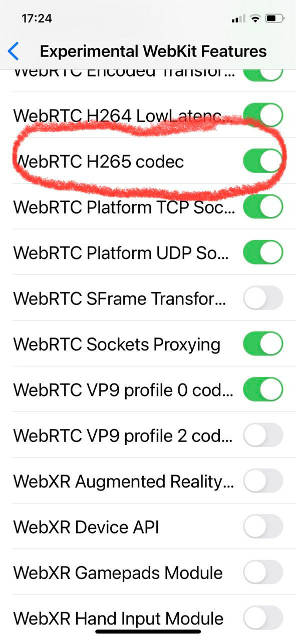

Both H.264/AVC and H.265/HEVC video codecs are supported for SEI metadata extraction.

Let's look at the setup and usage process. You will also find a video tutorial with a sync demo below.

Prerequisites

Both cases are set up in Nimble Streamer Live Transcoder, so make sure you have the following:

- the latest version of Nimble Streamer is installed.

- the Live Transcoder add-on is installed.

- an active Transcoder license is purchased and registered on your Nimble Streamer instance.

This guide assumes that you have already set up a live stream input with the protocol of your choice. Take a look at the live streaming digest page to find the relevant instructions, such as the full SRT setup guide, the RTMP setup article and more.

In our example, we have already set up the incoming stream "/live/stream1".

Make sure your source video streams carry SEI metadata and that all sources are synced with the same NTP server.

Nimble Streamer uses system time, so make sure your server's OS is also synced via NTP.
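If you want to confirm that a source actually carries SEI, a minimal sketch like the one below can scan a raw Annex B elementary stream captured from that source (how you capture it is up to you and outside this sketch). It relies on the standard NAL unit type values: 6 for SEI in H.264, and 39 and 40 (prefix and suffix SEI) in H.265/HEVC. The function names are hypothetical and this is not a Nimble tool. It only detects SEI NAL units; it does not check that they contain timecodes in the format your encoder uses.

```python
from __future__ import annotations

from typing import Iterator

# SEI NAL unit types: H.264 uses type 6; HEVC uses PREFIX_SEI (39) and SUFFIX_SEI (40).
SEI_TYPES = {"h264": {6}, "hevc": {39, 40}}


def iter_nal_units(data: bytes) -> Iterator[bytes]:
    """Split an Annex B stream on 0x000001 start codes (a 4-byte code matches on its last 3 bytes)."""
    starts = []
    pos = data.find(b"\x00\x00\x01")
    while pos >= 0:
        starts.append(pos + 3)
        pos = data.find(b"\x00\x00\x01", pos + 3)
    for n, start in enumerate(starts):
        end = starts[n + 1] - 3 if n + 1 < len(starts) else len(data)
        yield data[start:end]


def nal_unit_type(nal: bytes, codec: str) -> int:
    if codec == "h264":
        return nal[0] & 0x1F  # 1-byte header: type is the low 5 bits
    if codec == "hevc":
        return (nal[0] >> 1) & 0x3F  # 2-byte header: type is bits 1..6 of the first byte
    raise ValueError(f"unsupported codec: {codec}")


def count_sei_units(data: bytes, codec: str) -> int:
    """Count SEI NAL units; presence check only, the SEI payload is not parsed."""
    wanted = SEI_TYPES[codec]
    return sum(1 for nal in iter_nal_units(data) if nal and nal_unit_type(nal, codec) in wanted)


if __name__ == "__main__":
    # Tiny synthetic H.264 stream: SPS (type 7), SEI (type 6), IDR slice (type 5).
    h264 = (b"\x00\x00\x00\x01\x67\x42"
            b"\x00\x00\x00\x01\x06\x05\x10"
            b"\x00\x00\x01\x65\x88")
    print(count_sei_units(h264, "h264"))  # 1
    # Tiny synthetic HEVC stream: VPS (type 32) and a prefix SEI (type 39).
    hevc = b"\x00\x00\x00\x01\x40\x01" b"\x00\x00\x01\x4e\x01\x05"
    print(count_sei_units(hevc, "hevc"))  # 1
```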

Set up transcoder scenario and decoder element

First, let's add a transcoder scenario for our first stream. You can watch our Transcoder tutorial videos to see how it's usually done. Go to the "Transcoders" menu and click the "Create new scenario" button.

In this new scenario, add a new Video source element, which represents the decoder settings. Enter the first stream's application name and stream name in the respective edit boxes.

Then select the "Forward SEI timecodes" checkbox.

When this parameter is set, the Transcoder passes the SEI data on for further processing.

Note that you can use any decoder to extract SEI metadata except "quicksync", which is not supported at the moment.

NDI output setup

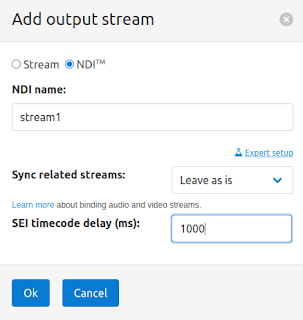

To create an NDI output, add a Video output element, select NDI there, and enter the name of the output.

Then click the Expert setup link to open additional parameters. There you will find the "SEI timecode delay (ms)" edit box.

This delay parameter defines how long the Transcoder waits, counting from the SEI timecode of a received frame, before sending the content to the output stream. Nimble waits for frames to arrive within this delay window and then sends them out. So if you set the same delay for several output streams in the Transcoder, they will be sent to their respective NDI streams at the same time, even if some of the frames arrived with extra latency.

Since NDI adds only a few milliseconds of delivery delay within a local network, your production software will receive all your streams simultaneously.

In our example, we set this delay to 1 second (1000 ms) to make sure frames from all source streams arrive in time, regardless of their own latency and network conditions.

Check the network conditions between your stream sources and your Nimble server, as well as any other parameters that may require a larger timecode delay. If you use SRT, check the "latency" parameter values used by your senders: your delay must be larger than those values, with some spare time added.

To learn more about NDI setup and usage in general, read the NDI setup article and watch the NDI video tutorials playlist.
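The sizing rule above (the delay must exceed every sender's SRT latency, plus spare time) can be expressed as a quick check. This is a minimal sketch: the function names, the example sender latencies and the 300 ms spare margin are hypothetical values chosen for illustration, not Nimble defaults; choose the margin from your own network measurements.

```python
from __future__ import annotations


def min_timecode_delay_ms(srt_latencies_ms: list[int], spare_ms: int) -> int:
    """Smallest delay that exceeds the largest sender SRT latency by spare_ms."""
    if not srt_latencies_ms:
        raise ValueError("at least one sender latency is required")
    if spare_ms <= 0:
        raise ValueError("spare_ms must be positive so the delay exceeds the latency")
    return max(srt_latencies_ms) + spare_ms


def delay_is_sufficient(delay_ms: int, srt_latencies_ms: list[int], spare_ms: int) -> bool:
    # The same delay value is meant to be set on every output that must stay in sync.
    return delay_ms >= min_timecode_delay_ms(srt_latencies_ms, spare_ms)


if __name__ == "__main__":
    senders = [120, 200, 500]  # hypothetical SRT "latency" values of three senders, in ms
    print(min_timecode_delay_ms(senders, spare_ms=300))  # 800
    print(delay_is_sufficient(1000, senders, spare_ms=300))  # True
```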

Add audio

Don't forget about audio content in your Transcoder scenario.

- For NDI audio output, add a decoder element with default settings and an NDI encoder, just like you did for video.

- For other types of audio output, you can either use a passthrough or create a decoder-encoder pair if you need to transform the audio.

Set up all streams

Once you save the scenario and its settings are applied to your server, create scenarios for all other streams, each with the same delay setting. As soon as you apply them, all outputs will be in sync with each other.

Forward SEI metadata

If you have an existing Transcoder scenario and want to make sure the SEI metadata is kept intact, you can enable SEI forwarding in the Video output element, under the Expert setup section.

Currently, SEI forwarding is supported only for the libx264 and NVENC encoding libraries.

With that option enabled, the resulting H.264 output stream will carry SEI time metadata. The recipient will need to take care of synchronizing the streams by itself.

Passthrough

If your scenario uses a Passthrough element for video, your SEI metadata is automatically passed through the Transcoder without any modification. You don't need to do anything specific in this case.

Video: tutorial with demo

Watch how this is set up, using a Makito X4 encoder as the source.

That's it.

Feel free to try this feature and let us know if you have any additional thoughts on it.

Follow us on social media to get updates about our new features and products: YouTube, Twitter, Facebook, LinkedIn, Reddit, Telegram

Related documentation

Nimble Streamer, Live Transcoder, NDI support in Nimble, Our YouTube channel