The first quarter of 2020 brought a lot of disruption into the lives of billions of people. The unprecedented global measures to reduce the harm from the pandemic have required many businesses to move online, work remotely and use live streaming more intensively.

Softvelum is fully committed to provide the best support to our customers, as always. Since the early days of inception, we were working remotely full-time. We were building and adjusting our business processes to keep the efficiency while expanding our team. Now when we have to self-isolate in order to stay healthy, we keep doing what we used to do through all these years, keeping the level of support on the same highest level. Feel free to contact our helpdesk and take a look at the list of our social networks at the bottom of this message to stay in touch with us.

With the extreme rise of interest for live streaming, we keep working on new features, here are updates from this quarter which we'd like to share with you.

Mobile products

Mobile streaming in on the rise now, so we keep improving it.

SRT

SRT protocol is being deployed into more products across the industry. Our company was among the first to implemented it into our products, and now we see more people building their delivery networks based on this technology. So we've documented this approach:

Live Transcoder

We are continuously improving Live Transcoder so this quarter we made a number of updates to make it more robust and efficient. Here are the latest features we've made.

Nimble Streamer

Read SVG News article about how Riot Games build their streaming infrastructure with various products, including Nimble Streamer.

A number of updates are available for Nimble Streamer this quarter:

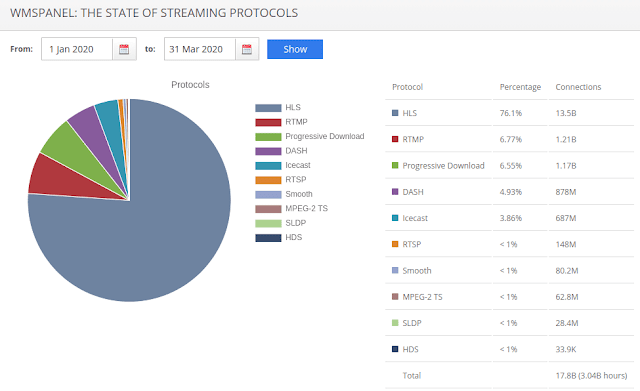

Also, take a look at the State of Streaming Protocols for 2020Q1.

If you'd like to get our future news and updates, please consider following our social networks. We've launched a Telegram channel recently and we now make more videos for our YouTube channel. As always, our Twitter, Facebook and LinkedIn feeds keep showing our news.

Stay healthy and safe, our team will help you carry on!

Softvelum is fully committed to providing the best support to our customers, as always. Since the early days of the company, we have been working remotely full-time, building and adjusting our business processes to stay efficient while expanding our team. Now that we have to self-isolate in order to stay healthy, we keep doing what we have done all these years and keep our support at the same high level. Feel free to contact our helpdesk, and take a look at the list of our social networks at the bottom of this message to stay in touch with us.

With the extreme rise of interest in live streaming, we keep working on new features. Here are the updates from this quarter which we'd like to share with you.

Mobile products

Mobile streaming is on the rise now, so we keep improving it.

- We thank vMix for creating a video tutorial about setting up streaming from Larix Broadcaster to vMix via SRT. This detailed review shows SRT in use for connecting mobile streamers with a vMix-powered production team.

- Synchronized playback on multiple devices is now available as part of SLDP technology. You can stream simultaneously from Nimble Streamer to SLDP Player on HTML5 web pages, Android and iOS for a better user experience. Watch the video demonstration of this feature in action.

- We've published a detailed setup article about SLDP Player, along with a video tutorial.

- Larix Broadcaster now supports streaming to Akamai-powered services like Dacast; read the setup article for details.

- Find more streaming setup examples in the Larix documentation reference.

SRT

The SRT protocol is being deployed in more and more products across the industry. Our company was among the first to implement it in our products, and now we see more people building their delivery networks based on this technology. So we've documented this approach:

- Glass-to-Glass Delivery with SRT: The Softvelum Way - a post for the SRT Alliance blog about building delivery from a mobile device through the Nimble media server into a mobile player.

- Glass-to-glass SRT delivery setup - a post in our blog describing the full setup details.

- All of our products - Nimble Streamer, Larix Broadcaster and SLDP Player - now use the latest SRT library version 1.4.1.

- Just in case you missed it, watch the vMix video tutorial for streaming from Larix Broadcaster to vMix via SRT, which can also be used as the source for such a delivery chain.

Live Transcoder

We are continuously improving Live Transcoder, and this quarter we made a number of updates to make it more robust and efficient. Here are the latest features.

- You can now create transcoding pipelines based only on NVENC hardware acceleration, which works on Ubuntu 18.04+. Read this setup article for more details.

- FFmpeg custom builds are now supported. This allows using additional libraries that Transcoder does not support at the moment. Read this article for setup details.

- The Transcoder control API is now available as part of the WMSPanel API. It's a good way to automate basic control operations.

Nimble Streamer

Read the SVG News article about how Riot Games built their streaming infrastructure with various products, including Nimble Streamer.

A number of updates are available for Nimble Streamer this quarter:

- HbbTV MPEG-DASH support is now available in Nimble Streamer.

- Read about fallback for published RTMP, RTSP and Icecast streams.

- Learn how to use Certbot with Nimble Streamer working on port 80.

Also, take a look at the State of Streaming Protocols for 2020Q1.

If you'd like to get our future news and updates, please consider following our social networks. We've recently launched a Telegram channel, and we now make more videos for our YouTube channel. As always, our Twitter, Facebook and LinkedIn feeds keep showing our news.

Stay healthy and safe, our team will help you carry on!