
Some complex transcoding scenarios may put excessive load on the hardware, which can degrade performance and result in errors. In that case you may find the following lines in Nimble Streamer logs: "Failed to encode on appropriate rate" or "Failed to decode on appropriate rate". This is a known issue for NVENC.

There are two approaches to solving this problem.

The first approach is to use shared contexts. Please read the "NVENC shared context usage" article to learn more about it and try it in action.

Another approach is to use the NVENC context caching mechanism in Nimble Streamer Transcoder. It creates encoding and decoding contexts when the transcoder starts, so when an encoding or decoding session starts, it picks up a context from the cache. It also allows reusing contexts.

The following nimble.conf parameters control NVENC context caching.

Check the configuration parameters reference for more information about Nimble config.

Use this parameter to enable the context cache feature:

nvenc_context_cache_enable = true

To handle the context cache efficiently, the transcoder needs to lock calls to the NVidia driver APIs for itself. This lets it queue context creation and control it exclusively, which improves performance significantly. This is what the following parameter is for:

nvenc_context_create_lock = true

You can make the transcoder create contexts at start, specifying how many contexts are created on each graphics unit for encoding and for decoding sessions.

The general format is:

nvenc_context_cache_init = 0:<EC>:<DC>,2:<EC>:<DC>,N:<EC>:<DC>

As you can see, it's a set of triples where the first number is the GPU number, EC is the number of encoder contexts and DC is the number of decoder contexts.

Check this example:

nvenc_context_cache_init = 0:32:32,1:32:32,2:16:16,3:16:16

This means you have 4 cards: the first two have 32 encoding and 32 decoding contexts each, and the other two have 16 encoding and 16 decoding contexts each.
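To make the triple format concrete, here is a minimal Python sketch that reads such a value into per-GPU counts and totals them. The function parse_cache_init is a hypothetical helper, not part of Nimble Streamer; it only mirrors the GPU:EC:DC format described above. Rejecting a GPU number that appears twice is an assumption of this sketch, not documented Nimble behavior.

```python
from typing import Dict, Tuple


def parse_cache_init(value: str) -> Dict[int, Tuple[int, int]]:
    """Parse e.g. "0:32:32,1:32:32" into {gpu: (encoder contexts, decoder contexts)}.

    Hypothetical helper mirroring the documented GPU:EC:DC format.
    """
    contexts: Dict[int, Tuple[int, int]] = {}
    for triple in value.split(","):
        parts = triple.strip().split(":")
        if len(parts) != 3:
            raise ValueError(f"expected GPU:EC:DC, got {triple!r}")
        gpu, enc, dec = (int(p) for p in parts)
        if gpu in contexts:
            # Assumption of this sketch: each GPU number should be listed once.
            raise ValueError(f"GPU {gpu} is listed more than once")
        contexts[gpu] = (enc, dec)
    return contexts


if __name__ == "__main__":
    cache = parse_cache_init("0:32:32,1:32:32,2:16:16,3:16:16")
    for gpu, (enc, dec) in sorted(cache.items()):
        print(f"GPU {gpu}: {enc} encoder contexts, {dec} decoder contexts")
    total_enc = sum(enc for enc, _ in cache.values())
    total_dec = sum(dec for _, dec in cache.values())
    print(f"Total: {total_enc} encoder, {total_dec} decoder contexts")
```

For the 4-card example this reports 96 encoder and 96 decoder contexts in total.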

When a new context is created in addition to those created at transcoder start, it is released once the encoder or decoder session is over (e.g. when publishing stops). To keep such contexts available for further re-use, set this parameter:

nvenc_context_reuse_enable = true

We recommend enabling this option by default.

For example, with 2 GPUs your config may look like this:

nvenc_context_cache_enable = true

nvenc_context_create_lock = true

nvenc_context_cache_init = 0:32:32,1:32:32

nvenc_context_reuse_enable = true
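If you maintain several servers, you may prefer to generate these lines instead of typing them. A minimal Python sketch, assuming one parameter per line is acceptable in nimble.conf; build_nvenc_config is a hypothetical helper, and its output simply reproduces the four parameters shown above.

```python
from typing import Dict, Tuple


def build_nvenc_config(contexts: Dict[int, Tuple[int, int]]) -> str:
    """Render the four context cache parameters for nimble.conf.

    `contexts` maps a GPU number to (encoder contexts, decoder contexts).
    Hypothetical helper for illustration only.
    """
    # GPUs are emitted in ascending order: "0:EC:DC,1:EC:DC,..."
    cache_init = ",".join(
        f"{gpu}:{enc}:{dec}" for gpu, (enc, dec) in sorted(contexts.items())
    )
    lines = [
        "nvenc_context_cache_enable = true",
        "nvenc_context_create_lock = true",
        f"nvenc_context_cache_init = {cache_init}",
        "nvenc_context_reuse_enable = true",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Two GPUs with 32 encoder and 32 decoder contexts each.
    print(build_nvenc_config({0: (32, 32), 1: (32, 32)}), end="")
```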

That's it. Use these parameters when you experience issues with NVENC.

Getting optimal parameters

Here are the steps you may follow in order to get the optimal number of decoding and encoding contexts.

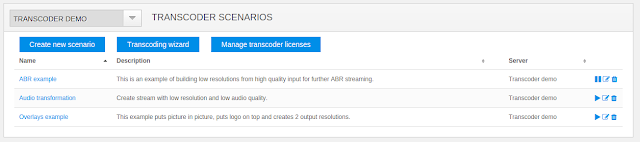

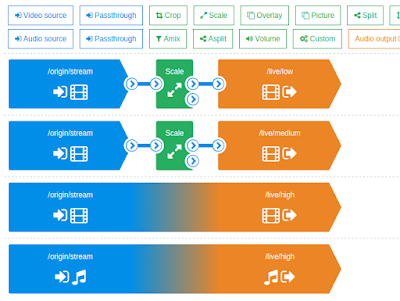

1. Create transcoding scenarios for the number of streams you plan to transcode, with both decoding and encoding done on the GPU.

2. Enable the new scenarios one by one at 1-minute intervals and check the Nimble logs after each scenario is enabled. If you don't see any errors, keep adding streams for transcoding.

3. This loads your GPU more and more until you get errors. It's important not to enable all scenarios at once, because each new context takes more time to create, as stated earlier in this article.

4. Once you start getting errors in the log, stop Nimble Streamer, then set nvenc_context_cache_init to the same value as the number of streams which failed to transcode. Also set the other parameters (nvenc_context_cache_enable, nvenc_context_create_lock, nvenc_context_reuse_enable) to the corresponding values.

5. Start Nimble Streamer and check /var/log/nimble/nimble.log for any errors. If you find transcoding-related errors, decrease the context cache value until you see no errors on Nimble Streamer start.

6. Once you see no issues on start, create a scenario which decodes the same number of streams as you have decoding contexts. E.g. if the cache is 0:0:20, make a scenario with 20 streams to decode and encode into any rendition.

7. Start the scenario and check the log. If you see decoding or encoding errors, remove streams from your scenario until there are no errors. Then check the output streams to make sure they have no artifacts.

8. The number of processed streams will be your maximum number of decoding contexts.

The idea is to load the GPU without caching until errors appear in the log. Once they do, you tune context caching to proper values until no errors are seen.

That's it. Now check examples of context caching for various NVidia cards.
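Checking the log after each step can be scripted. The sketch below counts the two error messages quoted at the start of this article; count_rate_errors is a hypothetical helper, and the default path /var/log/nimble/nimble.log is an assumption, so pass the location of your own nimble.log as the first argument if it differs.

```python
import os
import sys
from collections import Counter
from typing import Iterable

# The two NVENC error messages quoted in this article.
ERROR_MESSAGES = (
    "Failed to encode on appropriate rate",
    "Failed to decode on appropriate rate",
)


def count_rate_errors(lines: Iterable[str]) -> Counter:
    """Count how many log lines contain each NVENC rate error message."""
    counts: Counter = Counter()
    for line in lines:
        for message in ERROR_MESSAGES:
            if message in line:
                counts[message] += 1
    return counts


if __name__ == "__main__":
    # Assumed default log location; override it with the first argument.
    path = sys.argv[1] if len(sys.argv) > 1 else "/var/log/nimble/nimble.log"
    if not os.path.exists(path):
        print(f"Log file not found: {path}")
    else:
        with open(path, encoding="utf-8", errors="replace") as log:
            counts = count_rate_errors(log)
        if counts:
            for message, n in counts.items():
                print(f"{n} x {message}")
        else:
            print("No encode/decode rate errors found")
```

Running it after enabling each new scenario tells you when the GPU has reached the point described in step 4.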

Examples

Here are some configurations for known cards, tested in real scenarios. To use your GPU optimally, test it without context caching first, then set nvenc_context_cache_init to the values you found optimal during your tests.

Quadro P5000 (16 GB)

FullHD (1080p), high profile.

The maximum number of decoding contexts is 13. If you want to use the Quadro only for decoding, you can use

nvenc_context_cache_init = 0:0:13

If you want to use NVENC for both decoding and encoding, we recommend using

nvenc_context_cache_init = 0:10:5

Contexts are used as follows:

- decoding: 5 for FullHD

- encoding: 5 for FullHD + 5 for HD (720p)
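The recommended 0:10:5 value follows from simple arithmetic, assuming each decoded input stream takes one decoder context and each output rendition of that stream takes one encoder context. A sketch of that arithmetic; contexts_needed is a hypothetical helper, not a Nimble Streamer function.

```python
from typing import Sequence, Tuple


def contexts_needed(decoded_streams: int, renditions: Sequence[str]) -> Tuple[int, int]:
    """Return (encoder contexts, decoder contexts) for one GPU.

    Assumes one decoder context per input stream and one encoder
    context per output rendition of each stream.
    """
    return decoded_streams * len(renditions), decoded_streams


if __name__ == "__main__":
    # Quadro P5000: 5 FullHD inputs, each encoded to FullHD and 720p.
    enc, dec = contexts_needed(5, ["1080p", "720p"])
    print(f"nvenc_context_cache_init = 0:{enc}:{dec}")  # prints: nvenc_context_cache_init = 0:10:5
```

Treat the result only as a starting point: the measured limits for your card, found with the procedure above, take precedence.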

Tesla M60 (16 GB, 2 GPUs)

Read the "Stress-testing NVidia GPU for live transcoding" article to see the full testing procedure. The final numbers are as follows.

FullHD, high profile

The maximum number of decoding contexts is 20 per GPU. So if you want to use the GPUs only for decoding, you can use

nvenc_context_cache_init=0:0:20,1:0:20

To use NVENC for both encoding and decoding we recommend this value:

nvenc_context_cache_init=0:30:15,1:30:15

This means each GPU has 30 encoding and 15 decoding contexts.

Each GPU is used as:

- decoding: 15 for FullHD

- encoding: 15 for HD (720p), 15 for 480p

HD, high profile

For HD we recommend:

nvenc_context_cache_init=0:23:23,1:23:23

Each GPU is used as:

- decoding: 23 for HD

- encoding: 23 for 480p
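Because each triple starts with the GPU number, a card with two GPUs such as the Tesla M60 needs one triple for GPU 0 and one for GPU 1. A minimal sketch that builds such a value when every GPU gets the same counts; cache_init_value is a hypothetical helper, and the per-GPU numbers come from the M60 results above.

```python
def cache_init_value(gpu_count: int, enc: int, dec: int) -> str:
    """Build a nvenc_context_cache_init value giving every GPU the same counts.

    GPU numbers run from 0 to gpu_count - 1, one GPU:EC:DC triple per GPU.
    """
    return ",".join(f"{gpu}:{enc}:{dec}" for gpu in range(gpu_count))


if __name__ == "__main__":
    # Tesla M60 exposes 2 GPUs.
    # FullHD input: 15 decoders, 15 x (720p + 480p) = 30 encoders per GPU.
    print(cache_init_value(2, 15 * 2, 15))  # prints: 0:30:15,1:30:15
    # HD input: 23 decoders, 23 x 480p = 23 encoders per GPU.
    print(cache_init_value(2, 23, 23))  # prints: 0:23:23,1:23:23
```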

We'll add more cases as soon as we perform proper testing. If you have any results which you'd like to share, drop us a note.

Troubleshooting

If you face any issues when using Live Transcoder, follow the Troubleshooting Live Transcoder article to see what can be checked and fixed.

If you have any issues which are not covered there, contact us with any questions.


NVIDIA, the NVIDIA logo and CUDA are trademarks and/or registered trademarks of NVIDIA Corporation in the U.S. and/or other countries.